Discover how foundation models are revolutionizing NLP, shaping the future of AI by enhancing understanding, decision-making, and accessibility.

Since its inception, Natural Language Processing (NLP) has played a pivotal role in the study of AI, helping to close the comprehension gap between humans and machines. Foundation models now sit at the center of this technological shift. They are redefining not only how machines interpret human language but also how they learn, make decisions, and interact with the world, and in doing so are setting a new direction for artificial intelligence research.

What is NLP?

Understanding what natural language processing is and why it’s important is necessary before getting into the dynamics of foundation models and their impact.

Natural language processing (NLP) is the branch of artificial intelligence that enables machines to comprehend, interpret, and generate human language.

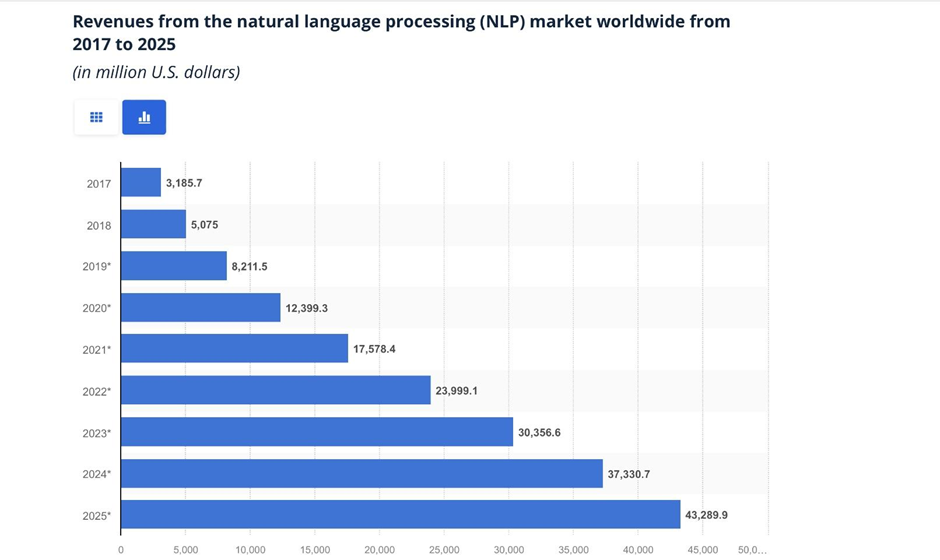

The NLP market is projected to grow sharply in the coming years. According to Statista, its value is expected to rise roughly fourteenfold, from approximately three billion dollars in 2017 to about 43 billion dollars by 2025.
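
As a quick check on those figures, the short sketch below recomputes the growth multiple and the annual growth rate it would imply. The two dollar values are the ones quoted from Statista; the assumption of smooth compound growth between 2017 and 2025 is ours, made purely for illustration.

```python
# Figures quoted in the text (Statista): about $3B in 2017, about $43B projected for 2025.
start_value_busd = 3.0
end_value_busd = 43.0
years = 2025 - 2017

# How many times larger the projected market is than the 2017 market.
growth_multiple = end_value_busd / start_value_busd

# Implied compound annual growth rate, ASSUMING smooth year-over-year growth.
implied_cagr = growth_multiple ** (1 / years) - 1

print(f"Growth multiple: {growth_multiple:.1f}x")
print(f"Implied annual growth: {implied_cagr:.1%}")
```

The multiple comes out a little above fourteen, and under the smooth-growth assumption the market would need to expand by somewhere between 39 and 40 percent each year to get there.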

NLP encompasses a diverse range of operations. One is parsing, which breaks sentences into their grammatical components so they can be analyzed more deeply. Another is semantic analysis, which works out the meaning, including the implied meaning, that words and phrases convey. These processes are what allow us to talk with voice assistants such as Siri or Alexa and to analyze large volumes of text in seconds.
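
To make those two operations a little more concrete, here is a deliberately tiny sketch. It labels each word with a part of speech (a first step toward parsing) and adds up the polarity of known words (a very crude stand-in for semantic analysis). Both lexicons are hypothetical and were invented for this example; real NLP systems learn this kind of information from data rather than from hand-written tables.

```python
import re

# Hypothetical toy lexicons, invented for this illustration only.
PART_OF_SPEECH = {
    "the": "DET", "a": "DET",
    "assistant": "NOUN", "question": "NOUN", "answer": "NOUN",
    "answered": "VERB", "gave": "VERB",
    "helpful": "ADJ", "confusing": "ADJ",
    "quickly": "ADV",
}
SENTIMENT = {"helpful": 1, "confusing": -1}


def tokenize(sentence: str) -> list[str]:
    """Lowercase the sentence and split it into word tokens."""
    return re.findall(r"[a-z']+", sentence.lower())


def tag(tokens: list[str]) -> list[tuple[str, str]]:
    """Label each token with a part of speech, or UNK if it is not in the lexicon."""
    return [(token, PART_OF_SPEECH.get(token, "UNK")) for token in tokens]


def sentiment_score(tokens: list[str]) -> int:
    """Sum the polarity of known sentiment words; 0 means neutral or unknown."""
    return sum(SENTIMENT.get(token, 0) for token in tokens)


tokens = tokenize("The assistant gave a helpful answer quickly.")
print(tag(tokens))
print(sentiment_score(tokens))
```

Anything outside the toy lexicons is tagged UNK or scored as neutral, which is exactly the brittleness that models trained on large corpora are meant to overcome.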

The Foundation Models: What are they?

Foundation models, as the name suggests, provide a ‘foundation’ of pre-training on a broad range of internet text. These models, which are trained on big and diverse datasets, lay the groundwork for a wide variety of uses, including translation, content generation, and more.

Natural language processing has undergone a significant paradigm shift as a direct result of these models. Their revolutionary nature stems from their adaptability: a single pre-trained model can be adapted to many different tasks.

Foundation models are no longer a future concept; they are a reality and are integrated into everyday tools. Take GitHub Copilot, for instance, which uses OpenAI Codex to help developers write code. It is not just about making developers feel more productive; it helps them get work done faster. In a controlled study by GitHub, developers who used Copilot completed a set coding task 55% faster than those who did not use the tool.

Implications for Natural Language Processing

Paradigm Shift in Training AI Models

Traditional AI models were task-specific: they needed specialized training data and often performed well in one setting but poorly in others. Foundation models have turned this approach on its head. Because they are pre-trained once on massive datasets and then adapted to individual tasks, they offer a more flexible and efficient way to build AI systems.
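
The sketch below illustrates only the shape of that idea: one shared encoder is reused unchanged, and each task trains nothing more than a small "head" on top of it. The `encode` function is a stand-in we made up (plain word counts), not a real pre-trained model, and the function names and training sentences are hypothetical.

```python
from collections import Counter


def encode(text: str) -> Counter:
    """Stand-in for a frozen pre-trained encoder: here, just word counts.

    A real foundation model would return learned dense features instead.
    """
    return Counter(text.lower().split())


def train_head(examples: list[tuple[str, int]], epochs: int = 10) -> dict[str, float]:
    """Train a small perceptron head on top of the shared, unchanged encoder.

    Labels are +1 or -1. Only the head's weights are updated.
    """
    weights: dict[str, float] = {}
    for _ in range(epochs):
        for text, label in examples:
            features = encode(text)
            score = sum(weights.get(w, 0.0) * c for w, c in features.items())
            if score * label <= 0:  # misclassified or undecided: nudge the weights
                for w, c in features.items():
                    weights[w] = weights.get(w, 0.0) + label * c
    return weights


def predict(weights: dict[str, float], text: str) -> int:
    """Return +1 or -1 for a new text using a trained head."""
    score = sum(weights.get(w, 0.0) * c for w, c in encode(text).items())
    return 1 if score > 0 else -1


# Two different tasks reuse the same encoder; only the tiny heads differ.
sentiment_head = train_head([  # +1 = positive, -1 = negative
    ("great product works well", 1),
    ("terrible product broke fast", -1),
    ("great value", 1),
    ("terrible support", -1),
])
topic_head = train_head([  # +1 = finance, -1 = sports
    ("stock market rallied", 1),
    ("team won the match", -1),
    ("market prices fell", 1),
    ("coach praised the team", -1),
])

print(predict(sentiment_head, "great support"))
print(predict(topic_head, "stock market fell"))
```

In the traditional setup, each of these tasks would have needed its own model built from the ground up. Here the expensive part is shared, and each new task costs only a handful of labeled examples and a few weights.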

Improvements in Language Understanding and Generation

AI’s capacity to comprehend and produce human language has improved substantially since the advent of foundation models. Trained on a massive corpus of internet text, these models can understand nuanced language, infer meaning from context, and produce prose that is remarkably close to human writing in coherence and relevance.

Revolutionizing Decision-Making Processes

The impact of foundation models extends beyond linguistics. They are also making waves in decision-making, improving it across varied areas, from supporting doctors in making diagnoses by reading medical information to assisting financial analysts in predicting market patterns.

Democratization of AI

The use of foundation models is helping to make artificial intelligence accessible to a wider audience. They are lowering the barrier to entry for NLP for businesses and individuals without considerable machine learning experience by providing a base model that can be fine-tuned for diverse tasks. This ease of use is fueling a wave of innovation and allowing individuals and businesses that previously lacked access to AI to reap its benefits.

Shift in AI Research Focus

Researchers in the field of artificial intelligence can now devote their time and energy to refining and applying existing models rather than developing new ones from scratch. Developing methods to fine-tune these models, understand how they function, and handle the issues they raise, particularly in the areas of ethics and data protection, is currently an important area of study.

Enabling Multimodal AI

NLP is just one application of foundation models. They also provide the groundwork for multimodal AI systems that can process and produce text, images, and audio. This extends the possibilities of AI and points to a future in which machines might mimic human behavior in social settings.

These changes, made possible by foundation models, mark a watershed moment in the development of AI. Although there are still problems to address, it is clear that these models have the potential to make a significant impact on the world. They are laying the groundwork for a future where machines can have meaningful interactions with us.

Key Attributes of Foundation Models

Foundation models stand out not only for their remarkable ability to comprehend and generate natural language but also for their adaptability. From analyzing customer sentiment in reviews to forecasting market movements using data from the news, these models may be fine-tuned for a variety of purposes.

This flexibility has allowed businesses and researchers to tap into the potential of cutting-edge NLP without requiring substantial specialized knowledge in machine learning. The recent democratization of AI is due in part to this adaptability.

Addressing the Challenges: Ethics, Transparency, and Data Privacy

Although foundation models hold a lot of potential, several issues must be considered and addressed.

Since these models train on internet data, which may contain biased or unsuitable content, ethical questions arise. A serious concern is that these biases could become entrenched in the model and carry over into its outputs.

These AI models also pose a transparency problem because of their ‘black box’ character: the way they arrive at their outputs is not fully understood. The study of model interpretability is becoming increasingly important in the quest to make AI a reliable and trustworthy resource rather than a mysterious force.

Finally, using massive amounts of online content to train these models raises data privacy concerns. Data anonymization helps prevent unwanted disclosure, but it is not foolproof, and personal information can still slip through.

As our reliance on foundation models grows, it is crucial that we address these challenges to ensure their implementation in a way that is acceptable, ethical, and transparent.

Foundation Models: Charting the Course for Tomorrow’s AI

The rise of foundation models signifies a transformative shift in AI and NLP. Far from being a passing phase, these models have set a benchmark for how deeply machines can interpret and engage with human language. As we refine them, they are poised to shape how we interact with the digital world.

The trajectory of natural language processing, steered by foundation models, points to a world where AI transitions from being a mere instrument to a dynamic ally, one with the ability to understand, adapt, and make informed decisions. This shift underscores AI’s transformative potential and moves us toward a future in which collaboration between humans and computers is real and tangible.

Conclusion

In conclusion, as we stand on the threshold of a new era in artificial intelligence, foundation models are leading the way. They are ushering in a period of rapid growth and transformation, while also leaving us with pertinent questions to ponder and challenges to overcome. These models encapsulate the promise of an AI-infused future that is more connected and sophisticated than we ever thought possible, and with it the creativity of human innovation. However, it is important to proceed cautiously and watch for risks along the way, while maintaining a firm commitment to the highest standards of ethics, transparency, and data protection. With foundation models, we have only just begun to explore what is possible.